Raising CIFAR10 training accuracy to 80% by deepening the network

Continuing from last time, I deepened the original network by increasing the number of convolutional layers from 3 to 6. The core code is as follows:

model = models.Sequential()
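As a rough illustration of going from three to six convolutional layers, here is a minimal sketch. It is not the author's exact network: the filter counts (32, 64, 128), the `padding="same"` setting, the 64-unit dense layer, and the function name `build_deeper_cnn` are all assumptions chosen for the example.

```python
from keras import layers, models


def build_deeper_cnn(num_classes=10):
    """Hypothetical six-convolution CNN for 32x32x3 CIFAR10 images."""
    model = models.Sequential()
    model.add(layers.Input(shape=(32, 32, 3)))
    # Block 1: two 3x3 convolutions, then 2x2 pooling (32x32 -> 16x16)
    model.add(layers.Conv2D(32, (3, 3), padding="same", activation="relu"))
    model.add(layers.Conv2D(32, (3, 3), padding="same", activation="relu"))
    model.add(layers.MaxPooling2D((2, 2)))
    # Block 2: two more convolutions with more filters (16x16 -> 8x8)
    model.add(layers.Conv2D(64, (3, 3), padding="same", activation="relu"))
    model.add(layers.Conv2D(64, (3, 3), padding="same", activation="relu"))
    model.add(layers.MaxPooling2D((2, 2)))
    # Block 3: the fifth and sixth convolutions (8x8 -> 4x4)
    model.add(layers.Conv2D(128, (3, 3), padding="same", activation="relu"))
    model.add(layers.Conv2D(128, (3, 3), padding="same", activation="relu"))
    model.add(layers.MaxPooling2D((2, 2)))
    # Classifier head (assumed sizes)
    model.add(layers.Flatten())
    model.add(layers.Dense(64, activation="relu"))
    model.add(layers.Dense(num_classes, activation="softmax"))
    return model


model = build_deeper_cnn()
model.summary()
```

Stacking two convolutions before each pooling step is one common way to add depth without shrinking the feature maps any faster than the three-layer version did.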

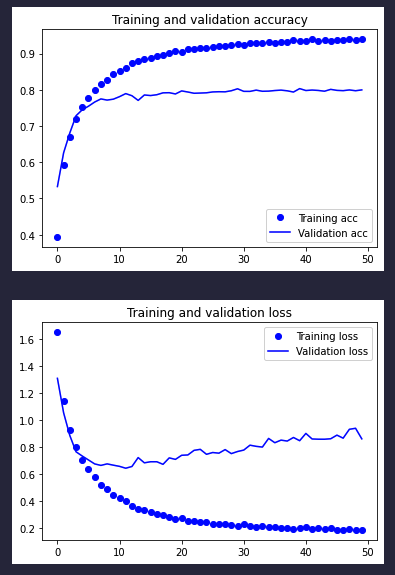

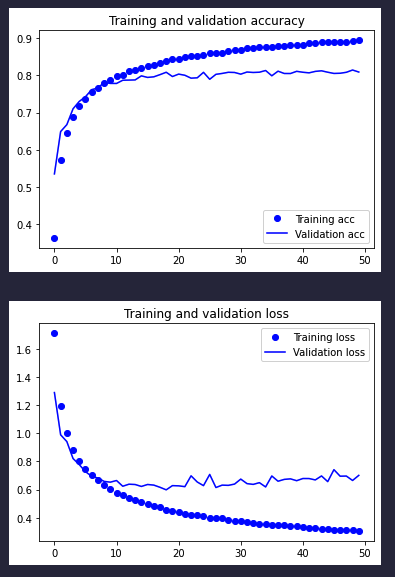

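The validation accuracy is slightly better than before, but the improvement is very limited: it only just begins to approach 80%. Next I tried adding Dropout layers: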

model = models.Sequential()
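To make clear what a Dropout layer does during training, here is a small pure-Python sketch of inverted dropout, the scheme Keras' `Dropout` layer uses: each value is zeroed with probability `rate`, and the survivors are scaled by `1 / (1 - rate)` so the expected sum is unchanged. The rate of 0.25 and the helper name `dropout` are assumptions for illustration, not values taken from the post.

```python
import random


def dropout(values, rate, training=True, rng=random):
    """Inverted dropout on a list of floats (illustrative helper)."""
    if not training or rate == 0.0:
        # At inference time dropout is a no-op
        return list(values)
    keep_scale = 1.0 / (1.0 - rate)
    # Zero each value with probability `rate`, scale the rest up
    return [0.0 if rng.random() < rate else v * keep_scale for v in values]


rng = random.Random(0)  # fixed seed so the run is repeatable
activations = [1.0] * 10000
dropped = dropout(activations, rate=0.25, rng=rng)

zero_fraction = dropped.count(0.0) / len(dropped)
mean_after = sum(dropped) / len(dropped)
print(f"zeroed: {zero_fraction:.2f}, mean after dropout: {mean_after:.2f}")
```

Because a different random subset of units is silenced on every batch, the network cannot rely on any single activation, which tends to reduce overfitting.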

With two Dropout layers added to the previous network, the results improved further:

Validation accuracy has now reached the 80% level.

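At this point I noticed a problem in my own code: during an earlier edit I had removed the pooling layer after the last convolutional layer, which was clearly a mistake. I have now added it back: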

from keras import layers

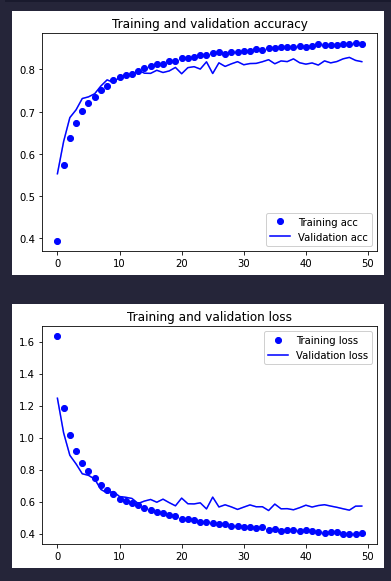

The results after retraining are as follows:

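The validation accuracy now ends up above 80%. That one is on me: without a pooling layer after the convolutional layers, the feature maps stay too large. Compressing them with a pooling layer often gives better training results.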
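The size difference can be checked with a little arithmetic. The sketch below assumes the last convolutional layer outputs an 8x8 feature map with 128 channels (illustrative numbers, not taken from the post) and compares the length of the flattened vector with and without a final 2x2 pooling layer.

```python
def pooled_size(size, pool=2):
    """Spatial size after non-overlapping pooling with window `pool`."""
    return size // pool


def flatten_length(height, width, channels):
    """Number of values that Flatten passes to the next layer."""
    return height * width * channels


# Assumed output of the last convolutional layer: 8x8 with 128 channels
h, w, c = 8, 8, 128

without_pool = flatten_length(h, w, c)
with_pool = flatten_length(pooled_size(h), pooled_size(w), c)

print(without_pool, with_pool)  # 8192 2048
```

Under these assumptions a single 2x2 pooling layer cuts the input to the classifier by a factor of four, which in turn shrinks the first dense layer's weight matrix by the same factor.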

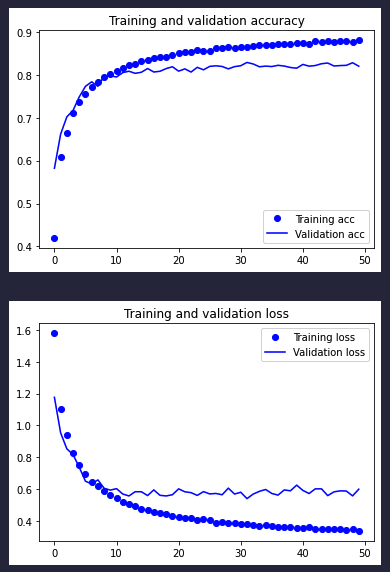

Next I tried removing the fully connected layer after Flatten, which gave even better numbers:

After 12 epochs of training, the validation accuracy stays steadily above 80%.
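One possible reason this helps is that the hidden dense layer usually holds most of the model's parameters, so removing it leaves far fewer weights to overfit. The sketch below counts parameters under assumed sizes: 2048 flattened features, a 512-unit hidden layer, and 10 output classes. None of these sizes come from the post except the 10 CIFAR10 classes.

```python
def dense_params(inputs, units):
    """Weights plus biases of one fully connected layer."""
    return inputs * units + units


flat_features = 4 * 4 * 128  # assumed Flatten output: 2048 values
hidden_units = 512           # assumed size of the removed dense layer
num_classes = 10             # CIFAR10 has 10 classes

# Flatten -> Dense(hidden) -> Dense(classes)
with_hidden = (dense_params(flat_features, hidden_units)
               + dense_params(hidden_units, num_classes))
# Flatten -> Dense(classes)
without_hidden = dense_params(flat_features, num_classes)

print(f"with hidden layer:    {with_hidden:,} parameters")
print(f"without hidden layer: {without_hidden:,} parameters")
```

With these assumed sizes the classifier head drops from roughly a million parameters to about twenty thousand, while the convolutional layers that extract the features are left untouched.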

The final code is as follows:

from keras import layers

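Author: 牧云踏歌

Published: 2022-04-15

Last updated: 2022-04-15

Original link: http://www.kankanzhijian.com/2022/04/15/cnn-cifar10-try-3-deep/

Copyright notice: All articles on this blog are the author's original work. Please credit the source when reposting.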